About CoPerception-UAV

CoPerception-UAV is the first comprehensive dataset for UAV-based collaborative perception.

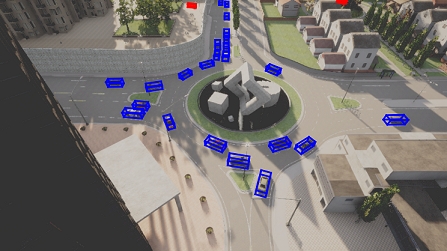

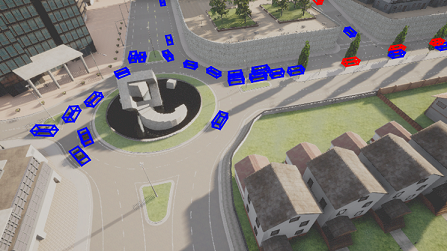

A UAV swarm can distribute tasks across its members and has the potential to achieve better, faster, and more robust performance than a single UAV. Planning and control of UAV swarms have recently been studied intensively; collaborative perception, however, remains under-explored due to the lack of a comprehensive dataset. This work aims to fill that gap by proposing a collaborative perception dataset for UAV swarms.

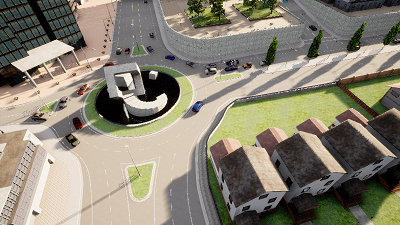

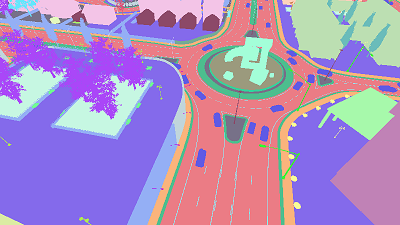

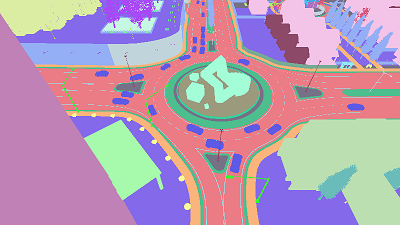

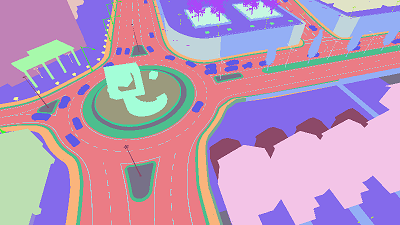

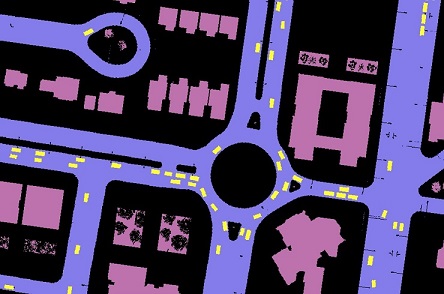

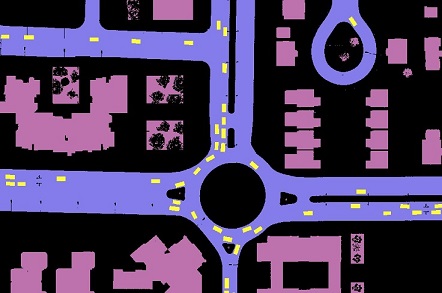

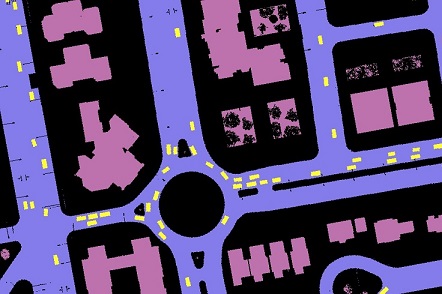

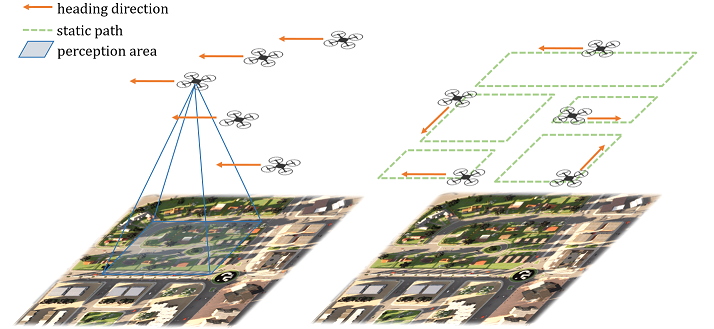

Built on a co-simulation platform combining AirSim and CARLA, our dataset consists of 131.9k synchronous images collected from 5 coordinated UAVs flying at 3 altitudes over 3 simulated towns in 2 swarm formations. Each image is fully annotated with pixel-wise semantic segmentation labels and 2D/3D bounding boxes of vehicles. We further build a benchmark on the proposed dataset by evaluating a variety of related multi-agent collaborative methods on multiple perception tasks, including object detection, semantic segmentation, and bird's-eye-view (BEV) semantic segmentation.
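The collection settings described above can be enumerated in a few lines of Python. This is only an illustrative sketch: the town and altitude labels are placeholders (the text gives the counts, not the names or values), and treating the settings as a full towns × altitudes × formations grid is an assumption rather than something the dataset description confirms.

```python
from itertools import product

NUM_UAVS = 5  # coordinated UAVs per swarm, as stated above

# Placeholder labels: the description gives only the counts (3 towns, 3 altitudes).
TOWNS = ["town_a", "town_b", "town_c"]
ALTITUDES = ["altitude_low", "altitude_mid", "altitude_high"]
# Formation mode names as used in the Swarm Arrangement section.
FORMATIONS = ["discipline", "dynamic"]


def capture_settings():
    """List every (town, altitude, formation) combination.

    Assumes a full factorial design, which is an illustration only.
    """
    return [
        {"town": town, "altitude": altitude, "formation": formation}
        for town, altitude, formation in product(TOWNS, ALTITUDES, FORMATIONS)
    ]


if __name__ == "__main__":
    settings = capture_settings()
    print(f"{len(settings)} settings, {NUM_UAVS} UAVs each")
    for setting in settings[:3]:
        print(setting)
```

Under the full-grid assumption this gives 3 × 3 × 2 = 18 distinct settings, each recorded by the same 5-UAV swarm.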

Swarm Arrangement

We arrange two types of formation modes for a UAV swarm: discipline mode, where the swarm keeps a consistent and relatively static array, and dynamic mode, where each UAV navigates independently in the scene.

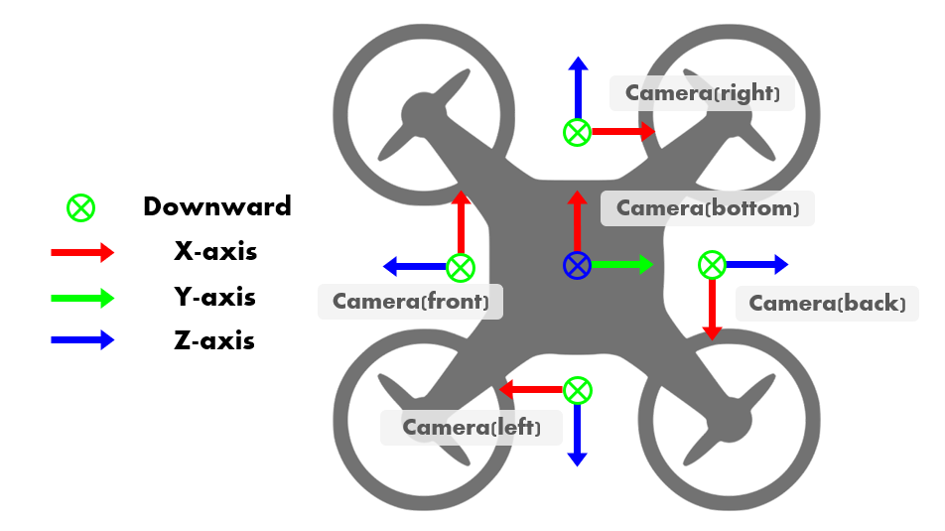

Sensor Setup

Each UAV in the swarm is equipped with 5 RGB cameras facing 5 directions and 5 semantic cameras that collect the semantic ground truth for the RGB cameras.

- 90° horizontal FoV

- 1 bird's-eye-view camera and 4 cameras facing forward, backward, right, and left with a pitch angle of -45°

- image size: 800x450 pixels
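The sensor setup above can be sketched as a small Python configuration, together with the pinhole intrinsics implied by the stated field of view and image size. Several things here are assumptions made for illustration: the camera names, the yaw convention (0° forward, increasing clockwise), the -90° pitch for the bird's-eye-view camera, and an ideal pinhole model with square pixels and a centered principal point. None of these come from the dataset's own tooling.

```python
import math
from dataclasses import dataclass

IMAGE_WIDTH = 800           # pixels, from the sensor setup
IMAGE_HEIGHT = 450          # pixels, from the sensor setup
HORIZONTAL_FOV_DEG = 90.0   # from the sensor setup


@dataclass(frozen=True)
class CameraConfig:
    name: str         # hypothetical label, not a dataset identifier
    yaw_deg: float    # assumed convention: 0 = forward, clockwise positive
    pitch_deg: float  # negative values look downward


def uav_cameras():
    """Five viewing directions per UAV; each hosts an RGB and a semantic camera."""
    return [
        CameraConfig("bev", 0.0, -90.0),     # assumed to look straight down
        CameraConfig("front", 0.0, -45.0),
        CameraConfig("right", 90.0, -45.0),
        CameraConfig("back", 180.0, -45.0),
        CameraConfig("left", 270.0, -45.0),
    ]


def pinhole_intrinsics(width, height, hfov_deg):
    """3x3 intrinsic matrix for an ideal pinhole camera with square pixels."""
    focal_px = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    return [
        [focal_px, 0.0, width / 2.0],
        [0.0, focal_px, height / 2.0],
        [0.0, 0.0, 1.0],
    ]


def vertical_fov_deg(height, focal_px):
    """Vertical field of view implied by the image height and focal length."""
    return math.degrees(2.0 * math.atan((height / 2.0) / focal_px))


if __name__ == "__main__":
    K = pinhole_intrinsics(IMAGE_WIDTH, IMAGE_HEIGHT, HORIZONTAL_FOV_DEG)
    print("focal length (px):", K[0][0])
    print("vertical FoV (deg):", vertical_fov_deg(IMAGE_HEIGHT, K[0][0]))
    for cam in uav_cameras():
        print(cam)
```

With a 90° horizontal FoV and an 800-pixel-wide image, the focal length works out to about 400 pixels, which in turn implies a vertical FoV of roughly 58.7° for the 450-pixel height under the same assumptions.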