AM3D-Sim

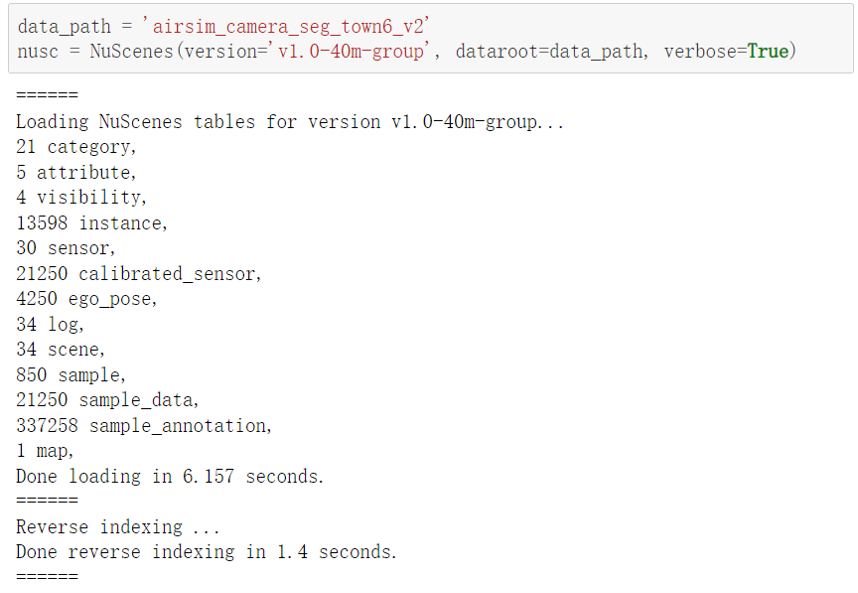

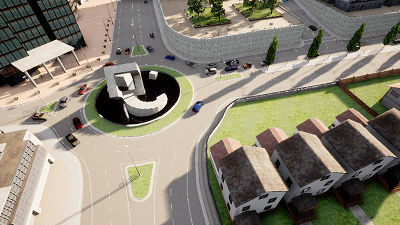

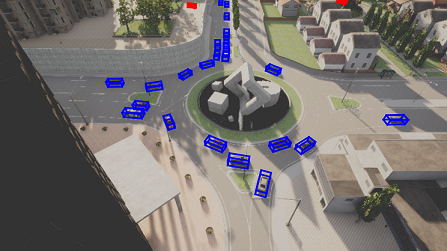

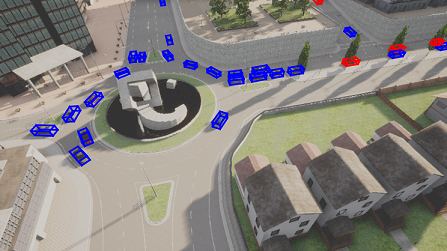

AM3D-Sim is collected through co-simulation of CARLA and AirSim. CARLA simulates complex scenes and traffic flow, while AirSim simulates the drones flying in the scene. To promote data diversity, the flying height ranges from 40 m to 80 m, mostly covering an area of 200 m x 200 m. Because annotations can be generated automatically in simulation, we provide a large and diverse simulation benchmark. It contains 3 large towns, with 48,250 images (41,500/6,750 for training/testing) along with 397,984 3D & 2D bounding boxes (347,588/50,396 for training/testing).

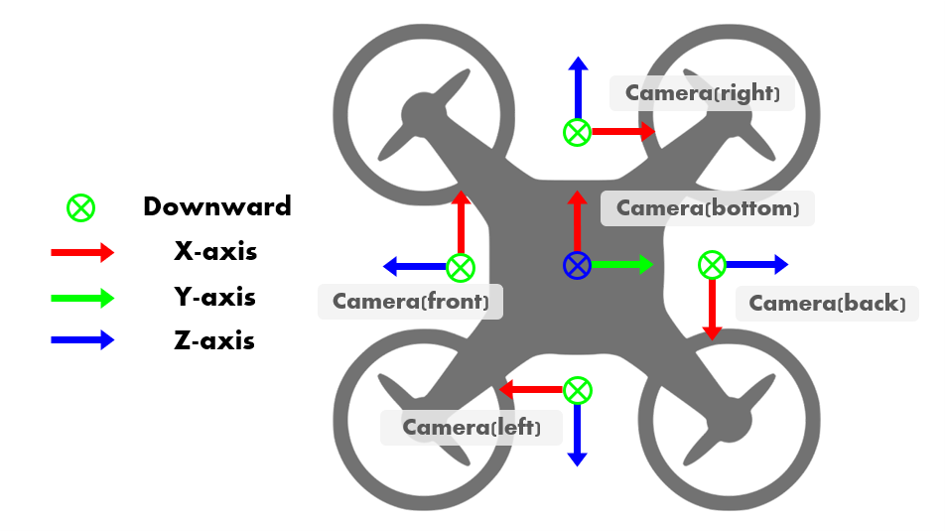

Sensor Setup

Each UAV in the swarm is equipped with 5 RGB cameras facing 5 directions and 5 semantic cameras that collect semantic ground truth for the RGB cameras.

- 90° horizontal FoV

- 1 bird's-eye-view camera and 4 cameras facing forward, backward, right, and left with a pitch of -45°

- image size: 800x450 pixels
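The field of view and image size listed above are enough to derive approximate pinhole intrinsics for the simulated cameras. The following is a minimal sketch, not the dataset's official calibration: it assumes an ideal pinhole model with square pixels, no lens distortion, and a principal point at the image centre. The function name `intrinsics_from_hfov` is hypothetical.

```python
import math

def intrinsics_from_hfov(width, height, hfov_deg):
    """Build a 3x3 pinhole intrinsic matrix (nested lists).

    Assumptions (not stated in the dataset description): square pixels,
    no distortion, principal point at the image centre.
    """
    # Focal length in pixels from the horizontal field of view.
    fx = width / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))
    fy = fx  # square pixels assumed
    cx, cy = width / 2.0, height / 2.0
    return [[fx, 0.0, cx],
            [0.0, fy, cy],
            [0.0, 0.0, 1.0]]

# Values taken from the sensor setup: 90 degree horizontal FoV, 800x450 images.
K = intrinsics_from_hfov(800, 450, 90.0)

# The vertical FoV implied by the same focal length and the image height.
vfov_deg = math.degrees(2.0 * math.atan(450 / (2.0 * K[1][1])))

print("fx = fy =", round(K[0][0], 3))
print("vertical FoV (deg) =", round(vfov_deg, 2))
```

With a 90° horizontal FoV, the focal length comes out at half the image width (400 pixels), and the implied vertical FoV is a little under 59°. If the released calibration files differ from these values, the files should take precedence.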

Simulation Camera Data

Bounding boxes

3D bounding boxes of vehicles are recorded at the same moment as the images. Each box includes its location (x, y, z) and rotation (w, x, y, z as a quaternion) in the global coordinate system, together with its length, width and height.

To specifically address the occlusion issue, we also provide a binary label for the occlusion status of each bounding box.
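To illustrate how a box in this format can be expanded into its 8 corner points, here is a minimal sketch. Several conventions are assumptions and should be checked against the released annotation files: the location is taken to be the box centre, the quaternion is a Hamilton quaternion in (w, x, y, z) order, and length, width and height lie along the box's local x, y and z axes. The function names are hypothetical.

```python
import math

def quat_to_rotmat(w, x, y, z):
    """Convert a (w, x, y, z) quaternion to a 3x3 rotation matrix."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalise defensively
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def box_corners(center, quat, size):
    """Return the 8 corners of a 3D box in global coordinates.

    center: (x, y, z), assumed to be the box centre
    quat:   (w, x, y, z) rotation in the global frame
    size:   (length, width, height), assumed along local x, y, z
    """
    length, width, height = size
    R = quat_to_rotmat(*quat)
    corners = []
    for sx in (-0.5, 0.5):
        for sy in (-0.5, 0.5):
            for sz in (-0.5, 0.5):
                local = (sx * length, sy * width, sz * height)
                # Rotate the local offset, then translate by the centre.
                corners.append(tuple(
                    center[i] + sum(R[i][j] * local[j] for j in range(3))
                    for i in range(3)
                ))
    return corners

# Example: a 4 m x 2 m x 1.5 m vehicle rotated 90 degrees about the z axis.
yaw = math.radians(90.0)
quat = (math.cos(yaw / 2), 0.0, 0.0, math.sin(yaw / 2))
corners = box_corners((10.0, 5.0, 0.75), quat, (4.0, 2.0, 1.5))
```

After the 90° yaw in this example, the box's length lies along the global y axis, so the corners span 2 m in x and 4 m in y.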

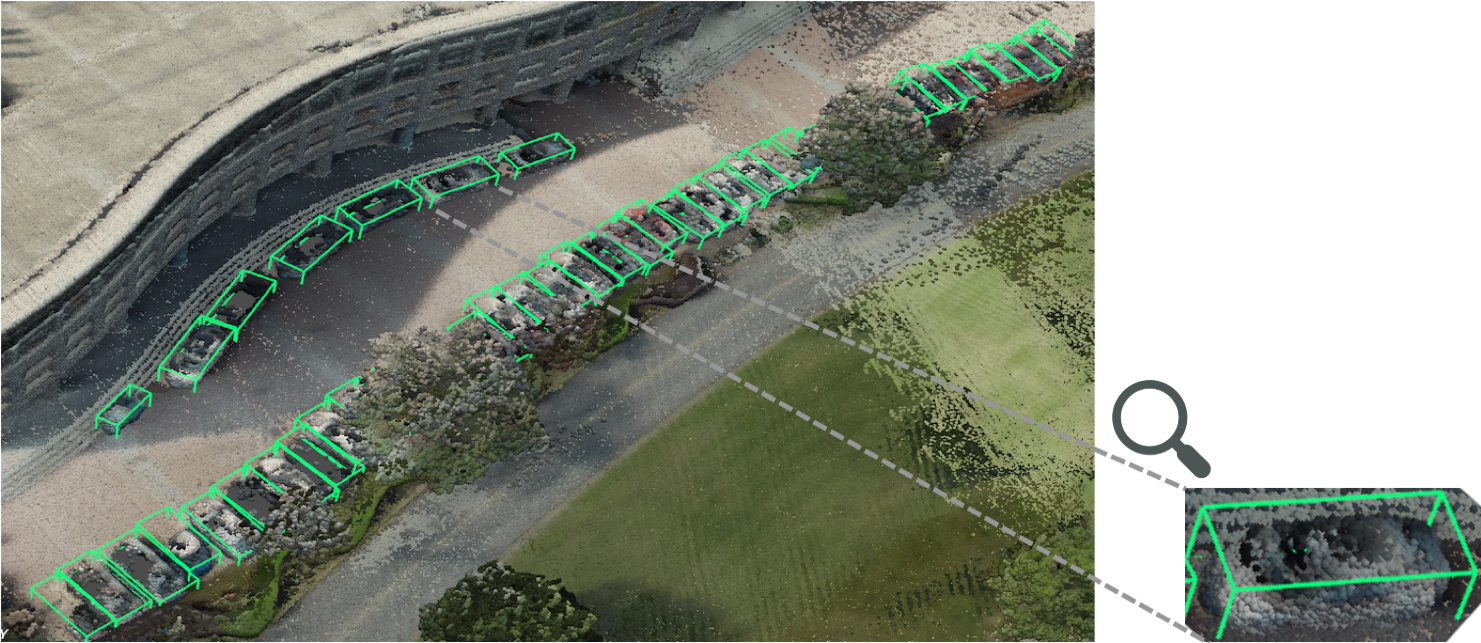

AM3D-Real

AM3D-Real is collected with a DJI Matrice 300 RTK flying over the campus. The drone is equipped with a well-aligned LiDAR and an RGB camera. We annotate the 3D bounding boxes in the point clouds collected by the LiDAR and obtain the 2D boxes by projecting the 3D boxes back onto the image using the calibrated camera projection matrix. Because data collection and labeling are challenging and costly, the flying height is lower, about 40 m, and the dataset is smaller. It contains 1,012 images (919/93 for training/testing) along with 33,083 3D & 2D bounding boxes (31,668/1,415 for training/testing).
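The 3D-to-2D projection step can be sketched as follows. This is an illustration under stated assumptions rather than the authors' annotation pipeline: it assumes a 3x4 projection matrix `P` that maps homogeneous 3D points to pixel coordinates, takes the 2D box as the axis-aligned extent of the projected corners, and clips it to the image. The function names, the example matrix and the example image size are hypothetical.

```python
def project_point(P, pt):
    """Project a 3D point with a 3x4 matrix; return (u, v) or None if behind the camera."""
    x, y, z = pt
    u, v, d = (P[r][0] * x + P[r][1] * y + P[r][2] * z + P[r][3] for r in range(3))
    if d <= 0:
        return None
    return (u / d, v / d)

def box_3d_to_2d(P, corners, width, height):
    """Return (x_min, y_min, x_max, y_max) of the projected corners, clipped to the image.

    Corners behind the camera are simply dropped, which is a simplification;
    returns None when nothing visible remains.
    """
    pts = [p for p in (project_point(P, c) for c in corners) if p is not None]
    if not pts:
        return None
    us = [p[0] for p in pts]
    vs = [p[1] for p in pts]
    x_min, x_max = max(0.0, min(us)), min(float(width), max(us))
    y_min, y_max = max(0.0, min(vs)), min(float(height), max(vs))
    if x_min >= x_max or y_min >= y_max:
        return None  # box falls entirely outside the image
    return (x_min, y_min, x_max, y_max)

# Hypothetical projection matrix: intrinsics only, camera at the origin looking along +z.
P = [[400.0, 0.0, 400.0, 0.0],
     [0.0, 400.0, 225.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]

# A 2 m cube centred 10 m in front of the camera.
cube = [(sx, sy, 10.0 + sz) for sx in (-1.0, 1.0) for sy in (-1.0, 1.0) for sz in (-1.0, 1.0)]
box_2d = box_3d_to_2d(P, cube, width=800, height=450)
```

In practice `P` would be built from the calibrated intrinsics and the LiDAR-to-camera extrinsics shipped with the dataset, and the corners would first be expressed in the coordinate frame that `P` expects.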

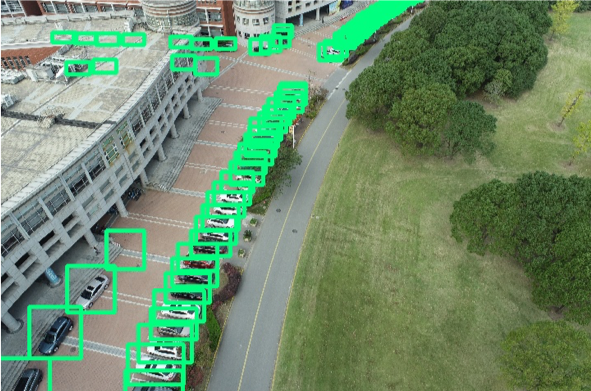

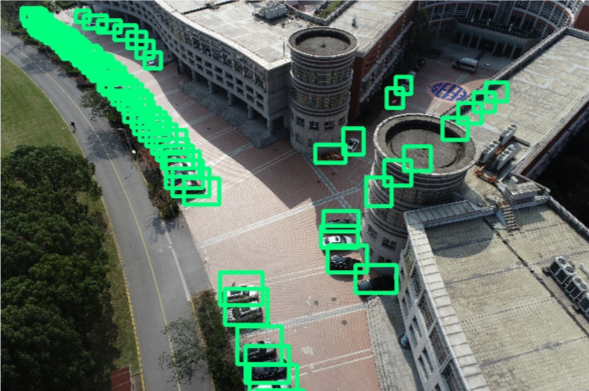

Real-world Camera Data

Real-world LiDAR Data

Bounding boxes

3D bounding boxes of vehicles are annotated in the 3D point clouds captured by the LiDAR and then projected onto the 2D images to obtain 2D bounding boxes. Each 3D box includes its location (x, y, z) and rotation (w, x, y, z as a quaternion) in the global coordinate system, together with its length, width and height.

To specifically address the occlusion issue, we also provide a binary label for the occlusion status of each bounding box.